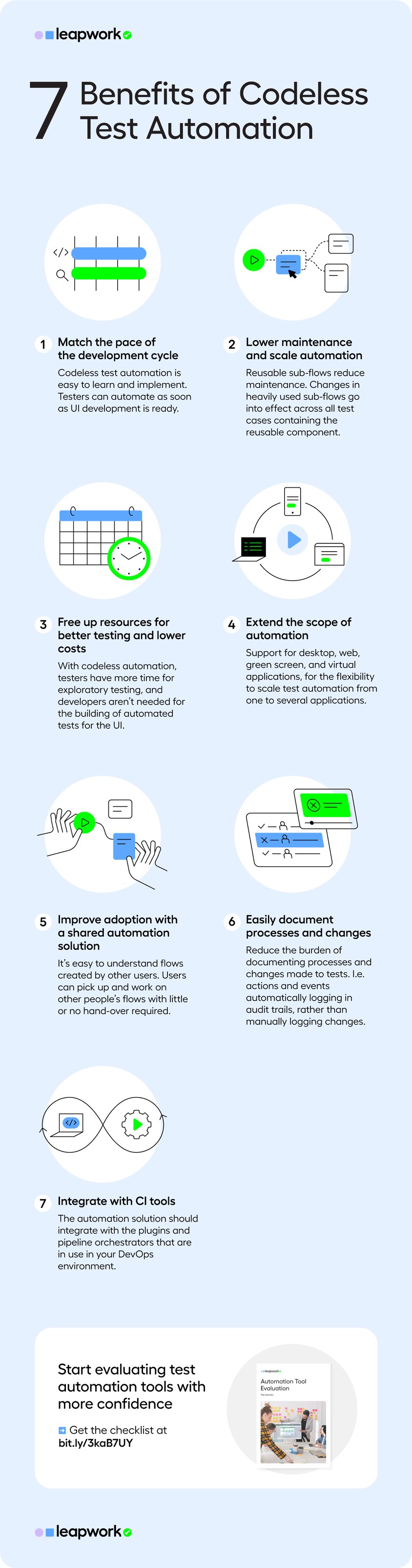

7 Benefits of Codeless Test Automation

Until now, automation frameworks and tools have required testers to spend their time programming. Codeless test automation is a much more efficient way for testers to work. Here's why.

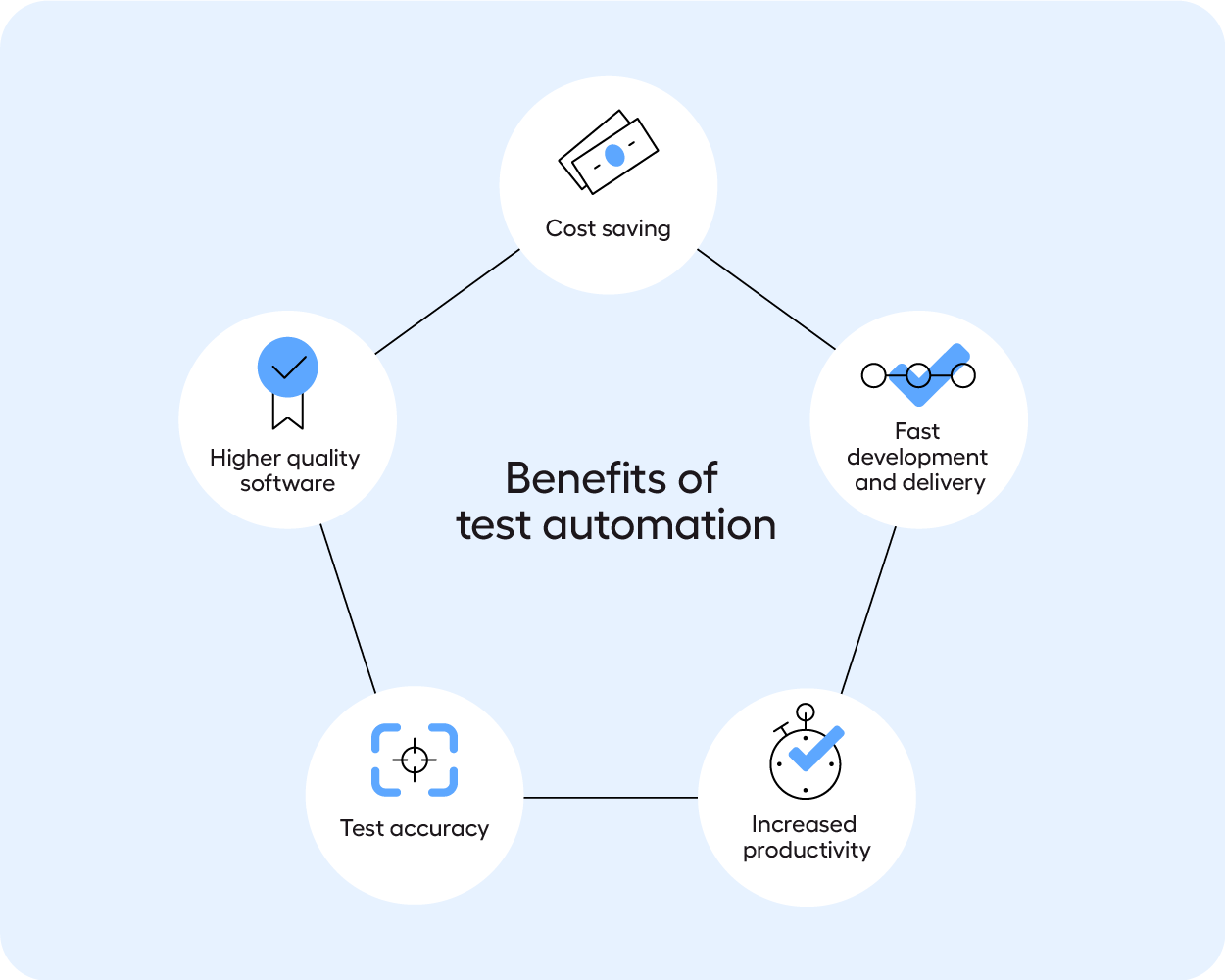

Test automation can bring general benefits, like reducing test-related costs, increasing productivity, speeding up delivery, and improving the accuracy of tests. But not all vendors can help you achieve these broad benefits.

This is because most test automation tools are developed with a programming mindset, much as operating systems once demanded programming skills before Windows 95 brought a visual interface to the mainstream.

The problem is that testers are not programmers, and insisting that they learn how to code is problematic. That mindset ignores the fact that coding, like any other craft, takes many years of practice to master. Coding also takes time away from testers’ primary function, because their invaluable skill set lies elsewhere. Nonetheless, “Get on board or get fired” remains a prevailing sentiment.

The paradox is obvious: test automation, which was supposed to free up resources for human testers, brings with it an array of new, costly tasks. Below are seven reasons why codeless UI automation is the way to go for your tests.

1. Match the pace of the development cycle

When automation is designed with visual UI workflows, all code is generated under the hood, which makes automation easy to learn and implement.

Testers can start automating test cases as soon as UI development is complete, without spending time on complex coding practices and frameworks.

This makes it easier to keep pace with a development cycle built on continuous integration.

2. Lower maintenance and scale automation

Visual UI workflows inherently follow current business rules and best practices, which makes test automation easy to scale and maintain.

Automation with Leapwork relies on native identification of the objects used in test cases. This means that, for the most part, automation flows do not need to be adjusted every time the system under test changes.

Automated flows can also be combined into reusable components that serve as sub-flows across test cases. When a heavily reused sub-flow is changed, the change takes effect in every test case that contains it.

3. Free up resources for better testing and lower costs

When testers don’t have to spend all their time automating regression tests by writing code, they have more time for exploratory testing of the applications they know best.

Codeless automation also removes the need to involve developers in creating UI tests, which lowers costs.

4. Extend the scope of automation

Leapwork’s codeless automation platform is built to support multiple types of applications, including web, desktop, and virtual applications.

In practice, this means a single automated test can span multiple interfaces across applications, whether they belong to the given project or lie beyond it.

This flexibility makes it easy to extend automation from one application to several and, in particular, to test the integration between projects.

5. Improve adoption with a shared automation solution

Unlike code-based custom frameworks, the Leapwork Automation Platform can be used as an all-in-one solution for automation needs across an organization. Combining a shared solution with a Center of Excellence setup makes it easy to share knowledge, best practices, and test cases across projects for collaboration. Sharing automation flows and components across projects reduces the time required to initiate new automation projects.

With a UI-based framework, flows created by other users are easy to understand, so users can pick up and work on other people’s flows with little or no handover. If a test case does not follow best practices, the flow is easy to correct by reading its steps and actions visually against the UI of the application under test.

Code-based frameworks don’t come with the resources and support that a dedicated automation solution provides. That support helps the chosen solution stay in use in the organization for longer and keeps it from depending on specific personnel.

6. Easily document processes and changes

UI workflows serve both as a visual description of a process and as documentation of how that process is executed. With the Leapwork Automation Platform, automated tests are documented with video and logs, and all actions and events are recorded in audit trails.

7. Integrate with CI tools

Your CI/CD pipeline should dictate the test automation solution, not the other way around. Leapwork comes with a fully documented REST API and native plugins for the most common DevOps tools and pipeline orchestrators. You can even build your own custom integrations with third-party systems.

Conclusion

In summary, with codeless UI automation, there’s no need to understand testing frameworks or the underlying technology of an application in order to automate tests.

Suddenly, success with test automation is within reach. An automation tool built on this visual approach empowers non-developers to create, maintain, and execute test automation themselves, without having to learn how to code.

Download the test automation evaluation checklist to take your knowledge a step further. It will help you get started with your evaluation and understand what businesses look for when searching for an easy-to-use, cross-technology solution.